conformable Facial Code Extrapolation Sensor (cFaCES)

by Farita Tasnim, David Sadat, and Canan Dagdeviren

Decoding of facial strains via conformable piezoelectric interfaces

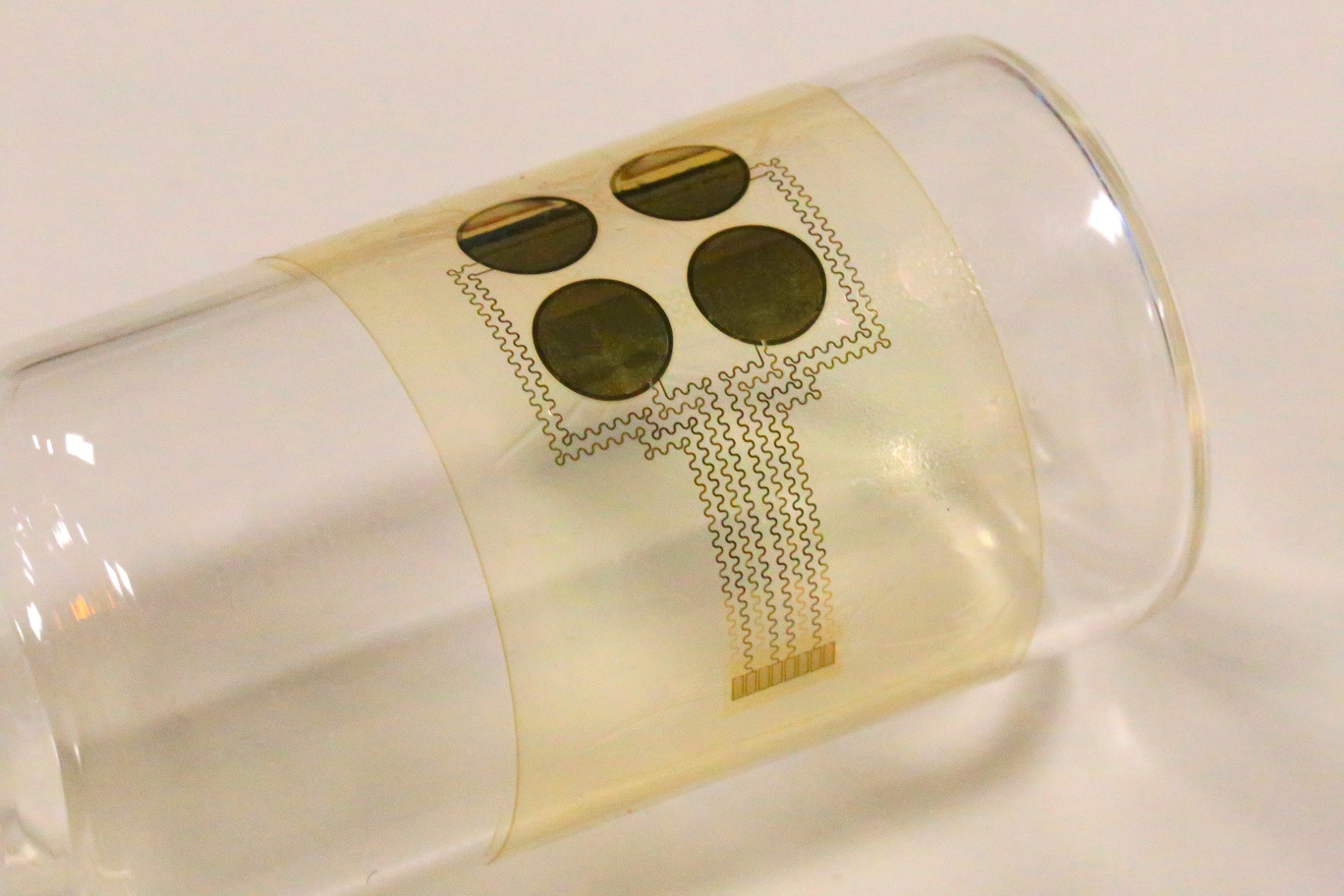

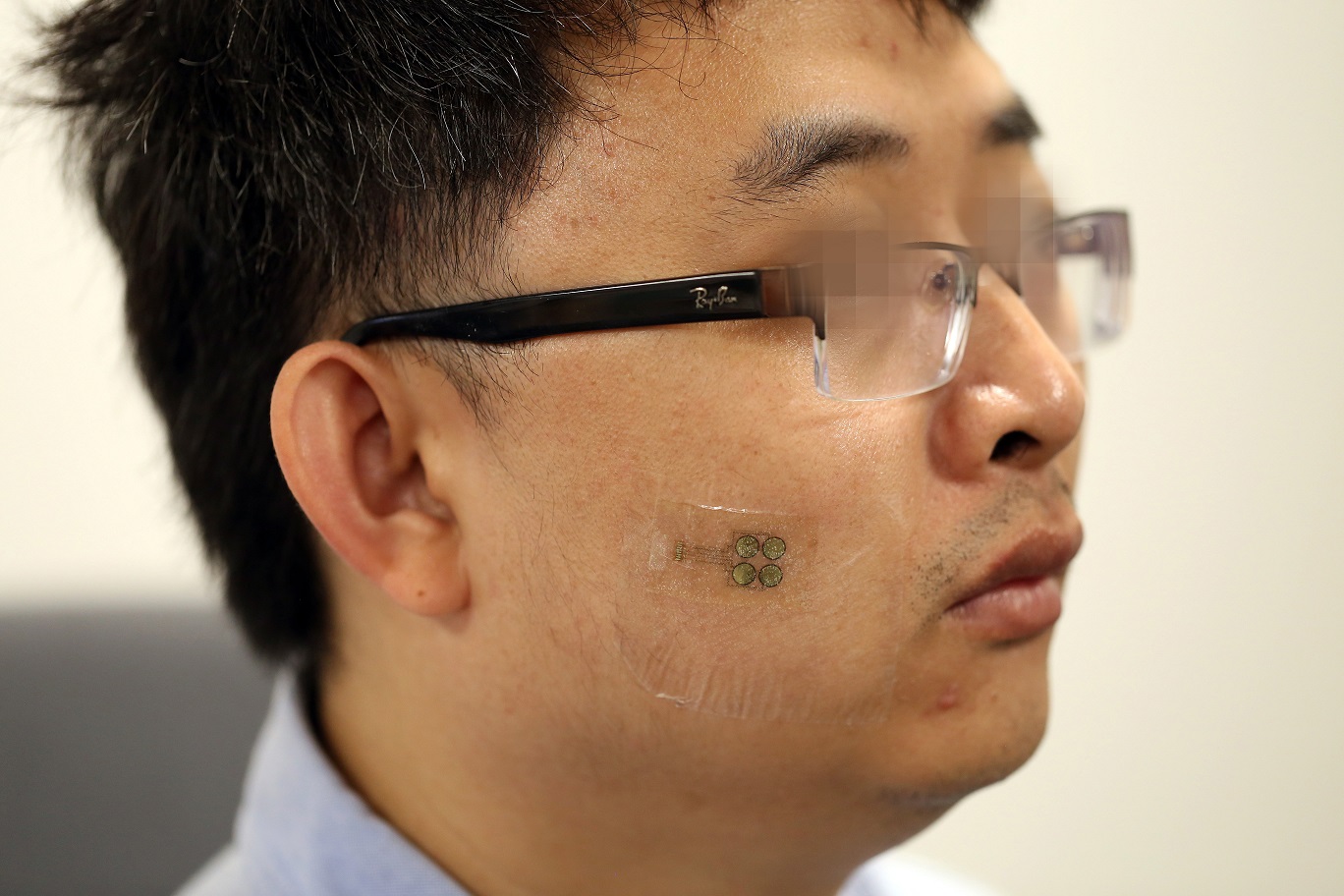

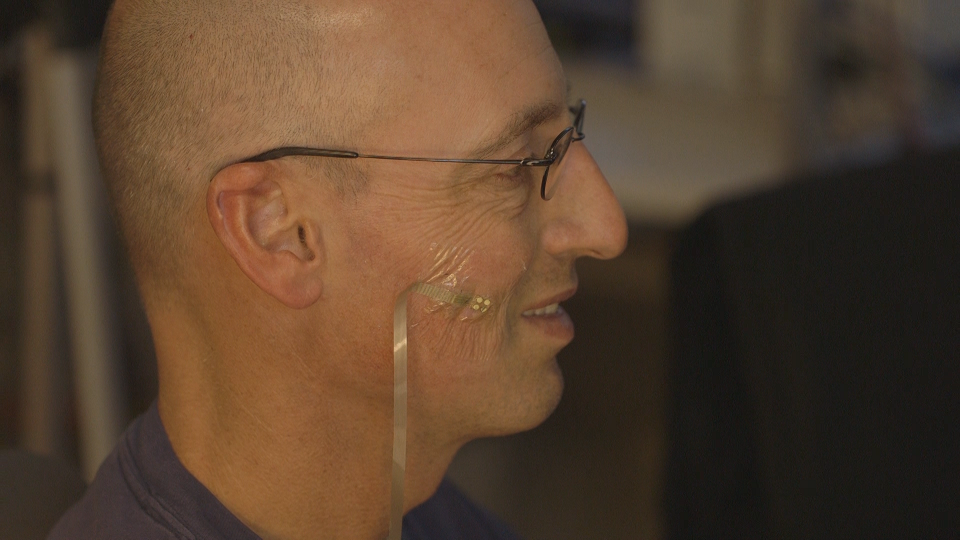

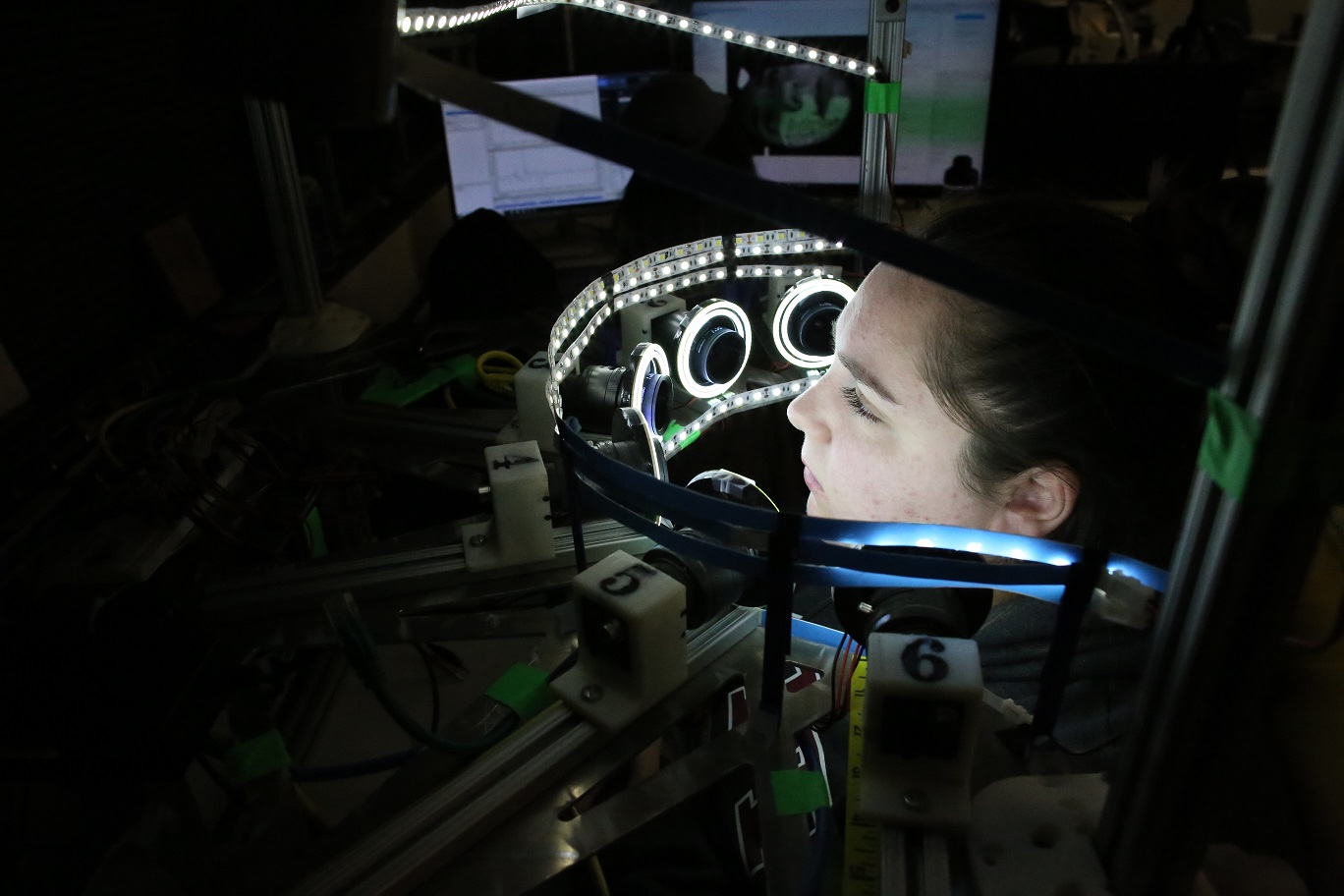

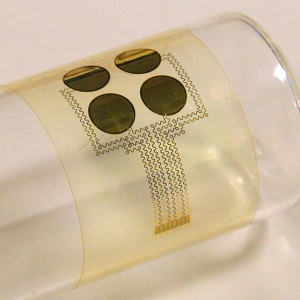

Devices that facilitate nonverbal communication typically require high computational loads or have rigid and bulky form factors that are unsuitable for use on the face or on other curvilinear body surfaces. Here, we report the design and pilot testing of an integrated system for decoding facial strains and for predicting facial kinematics.

The system consists of mass-manufacturable, conformable piezoelectric thin films for strain mapping; multiphysics modelling for analysing the nonlinear mechanical interactions between the conformable device and the epidermis; and three-dimensional digital image correlation for reconstructing soft-tissue surfaces under dynamic deformations as well as for informing device design and placement. In healthy individuals and in patients with amyotrophic lateral sclerosis (ALS), we show that the piezoelectric thin films, coupled with algorithms for the real-time detection and classification of distinct skin-deformation signatures, enable the reliable decoding of facial movements. The integrated system could be adapted for use in clinical settings as a nonverbal communication technology or for use in the monitoring of neuromuscular conditions.
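The summary mentions algorithms for the real-time detection and classification of skin-deformation signatures but does not describe them. The sketch below is purely illustrative and is not the cFaCES method. It assumes each piezoelectric channel yields a stream of voltage samples. It detects a deformation event when any channel's moving RMS rises above a threshold, summarizes each event by its per-channel peak voltage, and assigns a label with a nearest-centroid rule. Everything here is hypothetical: the function names (`detect_events`, `fit_centroids`, `classify`), the window and threshold values, the movement labels, and the synthetic half-sine pulses.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

# Hypothetical data layout: one list of voltage samples per sensor channel.
Signal = List[List[float]]  # indexed as [channel][sample]


def moving_rms(x: Sequence[float], window: int) -> List[float]:
    """Trailing-window root-mean-square of x, one value per sample."""
    out: List[float] = []
    acc = 0.0
    for i, v in enumerate(x):
        acc += v * v
        if i >= window:
            acc -= x[i - window] ** 2  # drop the sample leaving the window
        n = min(i + 1, window)
        out.append(math.sqrt(max(acc, 0.0) / n))  # clamp tiny negative round-off
    return out


def detect_events(sig: Signal, window: int, threshold: float) -> List[Tuple[int, int]]:
    """Return [start, end) sample ranges where any channel's RMS exceeds threshold."""
    rms = [moving_rms(ch, window) for ch in sig]
    n = len(sig[0])
    events: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i in range(n):
        active = max(r[i] for r in rms) > threshold
        if active and start is None:
            start = i  # onset of a deformation event
        elif not active and start is not None:
            events.append((start, i))  # offset: activity fell below threshold
            start = None
    if start is not None:  # event still open at the end of the record
        events.append((start, n))
    return events


def features(sig: Signal, start: int, end: int) -> List[float]:
    """Per-channel peak absolute voltage within one event."""
    return [max(abs(v) for v in ch[start:end]) for ch in sig]


def fit_centroids(examples: Dict[str, List[List[float]]]) -> Dict[str, List[float]]:
    """Mean feature vector per label (nearest-centroid training)."""
    return {
        label: [sum(col) / len(col) for col in zip(*vecs)]
        for label, vecs in examples.items()
    }


def classify(feat: List[float], centroids: Dict[str, List[float]]) -> str:
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(feat, centroids[label]))


def burst(n: int, start: int, length: int, amp: float) -> List[float]:
    """Synthetic half-sine pulse; illustrative only, not measured data."""
    return [
        amp * math.sin(math.pi * (i - start) / length) if start <= i < start + length else 0.0
        for i in range(n)
    ]


# Hypothetical 3-channel recording with two movements at different times.
N = 200
sig: Signal = [
    burst(N, 20, 40, 1.0),   # channel 0: strong during movement A
    burst(N, 20, 40, 0.3),   # channel 1: weak during movement A
    burst(N, 120, 40, 0.8),  # channel 2: strong during movement B
]

# Hypothetical labelled training features (per-channel peak voltages).
centroids = fit_centroids({
    "smile": [[0.9, 0.35, 0.0], [1.1, 0.25, 0.0]],
    "brow_raise": [[0.1, 0.0, 0.8], [0.1, 0.2, 1.0]],
})

events = detect_events(sig, window=5, threshold=0.1)
labels = [classify(features(sig, s, e), centroids) for s, e in events]
print(events, labels)
```

A nearest-centroid rule is used here only because it is short and needs no dependencies. The published system may use different features, detection logic, or classifiers entirely.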

Related Publication

Decoding of facial strains via conformable piezoelectric interfaces

Contributors: Farita Tasnim, David Sadat, Canan Dagdeviren